Generative Music: When Music Evolves from Works into Systems

For more than a century, music has largely been understood as a finished artifact: a composition written, recorded, and distributed as a fixed piece of content.

Generative Music challenges this assumption.

Instead of treating music as a static work, Generative Music treats it as a living system that continuously produces sound through algorithms, rules, and models.

In this new paradigm, composers no longer design every note.

They design the process that creates the notes.

Music becomes something that runs, not something that merely plays.

What Is Generative Music?

Generative Music refers to music that is created autonomously by computational systems based on predefined rules, probabilistic processes, or machine-learning models.

Rather than storing finished tracks, a Generative Music system contains:

- Musical rules

- Structural constraints

- Parameter ranges

- Style definitions

When the system operates, it produces music in real time.

You can think of it as:

Composer → System Architect

Composition → Algorithm

Music → Runtime Output
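As a rough illustration of that mapping, the sketch below stores a system description rather than a finished track. The `GenerativeSystem` class, its field names, and the note-picking logic are hypothetical choices made for this example, not a standard format.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerativeSystem:
    """A stored 'composition' that holds rules, not notes (hypothetical layout)."""
    scale: tuple = (60, 62, 64, 67, 69)  # musical rule: C major pentatonic (MIDI)
    max_step: int = 2                    # structural constraint: max move in scale steps
    tempo_range: tuple = (60, 80)        # parameter range, in BPM
    style: str = "ambient"               # style definition (a label only in this sketch)

    def run(self, n_notes, seed=None):
        """Return (tempo, pitches); a different seed gives a different result."""
        rng = random.Random(seed)
        tempo = rng.randint(*self.tempo_range)
        index = rng.randrange(len(self.scale))
        notes = []
        for _ in range(n_notes):
            notes.append(self.scale[index])
            step = rng.randint(-self.max_step, self.max_step)
            # Clamp the index so the melody never leaves the scale.
            index = max(0, min(len(self.scale) - 1, index + step))
        return tempo, notes


system = GenerativeSystem()
print(system.run(8, seed=1))
print(system.run(8, seed=2))
```

The same stored system yields a different phrase for each seed, which is the sense in which the music is runtime output rather than saved content.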

A Brief Historical Perspective

Generative Music did not originate with modern AI.

Key milestones include:

- 1950s: Algorithmic composition experiments

- 1970s: Brian Eno's system-based ambient works, such as Discreet Music (1975), put the idea into practice; he popularizes the term "generative music" in the mid-1990s

- 1990s: MIDI-based procedural music systems

- 2010s: Real-time synthesis engines and interactive sound design

- 2020s: Deep learning and foundation audio models

Artificial intelligence dramatically expands the expressive power of Generative Music, but the conceptual foundation predates AI.

Traditional Music vs. Generative Music

| Dimension | Traditional Music | Generative Music |
| --- | --- | --- |
| Structure | Fixed | Dynamic |
| Duration | Finite | Potentially infinite |
| Repetition | High | Extremely low |
| Creation Method | Manual composition | Rule / model design |
| Content Storage | Files | Algorithms |

Traditional music is content-centric.

Generative music is system-centric.

Core Technologies Behind Generative Music

Rule-Based Engines

Music theory–driven constraints for harmony, melody, rhythm, and form.
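A minimal sketch of a rule-based engine, assuming three illustrative rules picked for this example: stay inside one scale, never repeat a note immediately, and keep melodic leaps small. The names `C_MAJOR`, `MAX_LEAP`, `allowed_next`, and `rule_based_melody` are hypothetical.

```python
import random

C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]  # MIDI pitches, C4 to C5
MAX_LEAP = 4  # rule: no interval larger than 4 semitones


def allowed_next(current):
    """Pitches the rules permit after `current`: in scale, not a repeat, small leap."""
    return [p for p in C_MAJOR if p != current and abs(p - current) <= MAX_LEAP]


def rule_based_melody(length, seed=None):
    """Random walk that only ever picks from rule-approved pitches."""
    rng = random.Random(seed)
    melody = [C_MAJOR[0]]  # start on the tonic
    while len(melody) < length:
        melody.append(rng.choice(allowed_next(melody[-1])))
    return melody


print(rule_based_melody(12, seed=7))
```

Randomness picks the note, but the rules decide which notes are eligible, so every output stays within the constraints.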

Probabilistic Models

Stochastic selection of musical events using probability distributions.
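One common way to do this is a first-order Markov chain, where the next note is drawn from a distribution that depends on the current note. The sketch below assumes a small hand-written transition table; the weights and the `markov_melody` helper are invented for illustration.

```python
import random

# Hypothetical transition table: current note -> {next note: probability weight}.
TRANSITIONS = {
    "C": {"D": 0.4, "E": 0.4, "G": 0.2},
    "D": {"C": 0.5, "E": 0.5},
    "E": {"D": 0.3, "G": 0.5, "C": 0.2},
    "G": {"E": 0.6, "C": 0.4},
}


def markov_melody(start, length, seed=None):
    """Walk the chain, sampling each next note from the current note's weights."""
    rng = random.Random(seed)
    note = start
    melody = [note]
    for _ in range(length - 1):
        options = TRANSITIONS[note]
        note = rng.choices(list(options), weights=list(options.values()), k=1)[0]
        melody.append(note)
    return melody


print(markov_melody("C", 16, seed=5))
```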

Machine Learning Models

Neural networks trained on musical corpora to learn style and structure.

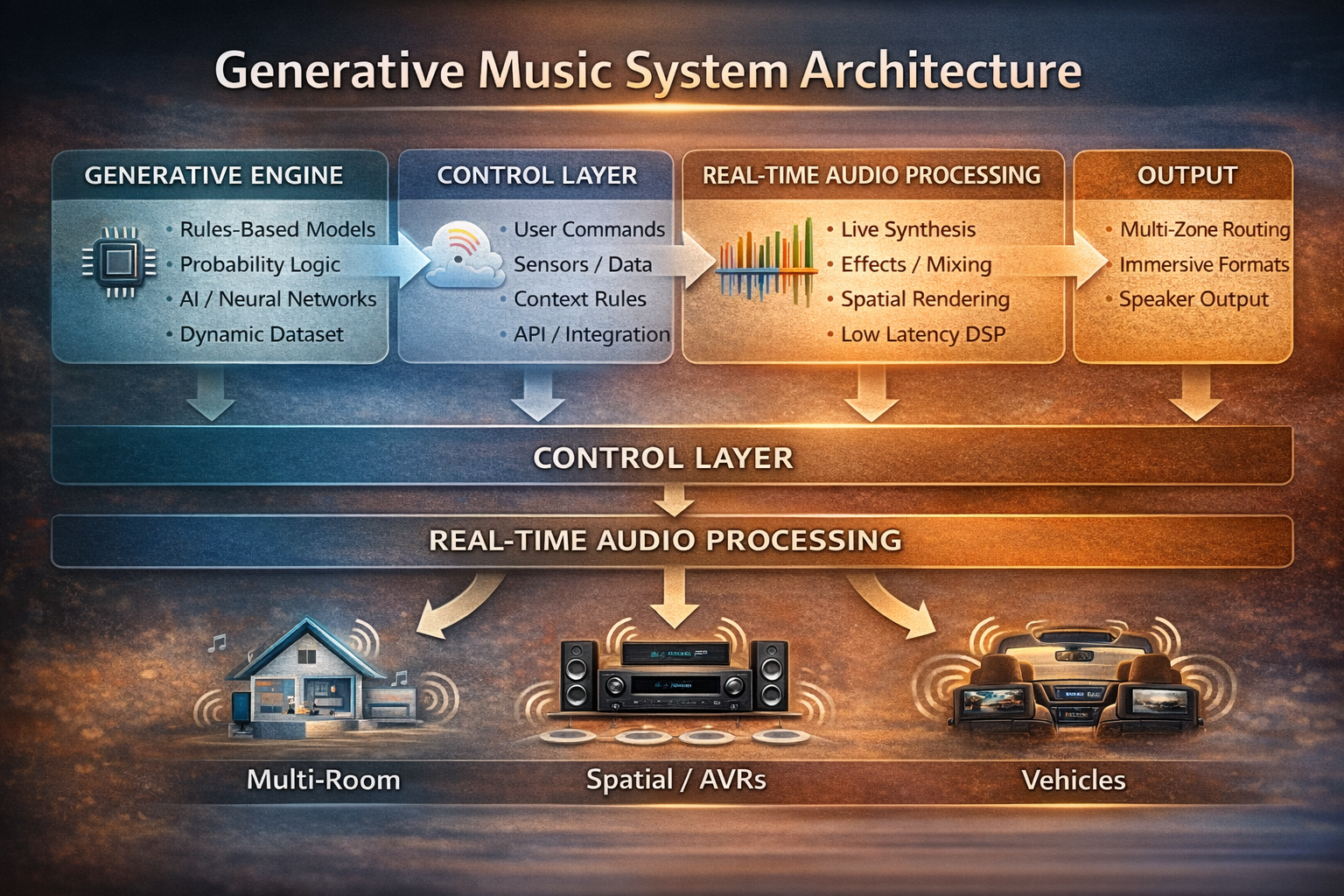

Real-Time Audio Engines

Synthesis, processing, spatialization, and mixing performed live.
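To make the "performed live" part concrete, here is a small sketch of block-based synthesis and mixing in pure Python. The sample rate, block size, and the `SineVoice` and `mix` names are assumptions for this example; a real engine would pass each block to an audio driver callback rather than keep it in a list.

```python
import math

SAMPLE_RATE = 48_000  # samples per second (assumed)
BLOCK_SIZE = 256      # samples rendered per call (assumed)


class SineVoice:
    """Renders audio block by block, keeping phase so consecutive blocks join cleanly."""

    def __init__(self, freq_hz, gain=0.2):
        self.freq_hz = freq_hz
        self.gain = gain
        self.phase = 0.0  # radians

    def render_block(self):
        step = 2 * math.pi * self.freq_hz / SAMPLE_RATE
        block = []
        for _ in range(BLOCK_SIZE):
            block.append(self.gain * math.sin(self.phase))
            self.phase = (self.phase + step) % (2 * math.pi)
        return block


def mix(blocks):
    """Sum several voices sample by sample (the mixing stage)."""
    return [sum(samples) for samples in zip(*blocks)]


voices = [SineVoice(220.0), SineVoice(330.0)]
out = mix([v.render_block() for v in voices])
print(len(out))
```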

All four layers often coexist inside modern systems.

Why Generative Music Matters

- Infinite Content

  A single system can produce an effectively unlimited stream of variations, with exact repeats unlikely.

- Reduced Listener Fatigue

  Well suited to long-duration listening environments.

- Context Awareness

  Music adapts to:

  - Time of day
  - User activity
  - Location
  - Mood
  - Environmental sensors

- Brand Identity at the Algorithm Level

  Brands define a sonic identity as a system rather than a single jingle.
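The context-awareness point can be sketched as a function from context signals to engine parameters. Everything here is illustrative: the `MusicParams` fields, the activity labels, and the specific numbers are assumptions, and only two of the listed signals (time of day and user activity) are modelled.

```python
from dataclasses import dataclass


@dataclass
class MusicParams:
    tempo_bpm: int
    brightness: float  # 0.0 (dark) to 1.0 (bright)
    density: float     # 0.0 (sparse) to 1.0 (busy)


def params_for_context(hour, activity):
    """Map time of day and user activity to engine parameters (illustrative values)."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be in 0..23")
    is_night = hour >= 22 or hour < 6
    params = MusicParams(
        tempo_bpm=60 if is_night else 80,
        brightness=0.2 if is_night else 0.6,
        density=0.3,
    )
    if activity == "workout":
        params.tempo_bpm += 40
        params.density = 0.8
    elif activity == "focus":
        params.density = 0.2
    return params


print(params_for_context(9, "workout"))
print(params_for_context(23, "rest"))
```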

How Generative Music Changes Playback

Traditional model:

Select track → Play file

Generative model:

Start engine → Music emerges

Playback becomes the act of running software, not reading data.

This shifts the role of audio hardware from playback device to audio computing node.
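In code terms, the difference is roughly that between reading a finite file and pulling from a generator that never ends. The sketch below assumes a toy `engine` function that yields note events; the scale and duration choices are arbitrary.

```python
import itertools
import random


def engine(seed=None):
    """Endless stream of (pitch, duration_in_beats) events; there is no last event."""
    rng = random.Random(seed)
    scale = [57, 60, 62, 64, 67]  # A minor pentatonic, MIDI numbers
    while True:
        yield rng.choice(scale), rng.choice([0.5, 1.0, 2.0])


# "Start engine": events are computed only as the listener consumes them.
for pitch, beats in itertools.islice(engine(seed=42), 5):
    print(pitch, beats)
```

Here `itertools.islice` stands in for the listener pressing stop; the engine itself has no track length.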

Home Theater Integration: Living Sound Environments

In modern home theaters, Generative Music enables:

Dynamic Pre-Movie Ambience

Soft evolving textures that adapt to lighting and time.

Adaptive Intermission Soundscapes

Music slowly morphs rather than abruptly stopping.

Room-Aware Variations

Each zone receives a related but slightly different version.
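One possible way to get "related but slightly different" output is to share a motif across zones and derive a per-zone random seed from the zone name. This is a sketch under that assumption; `BASE_PHRASE`, `zone_variation`, and the octave-shift idea are made up for the example.

```python
import random
import zlib

BASE_PHRASE = [60, 64, 67, 72, 67, 64]  # motif shared by every zone (MIDI numbers)


def zone_variation(zone_name, shared_seed, change_prob=0.3):
    """Return the shared phrase with some notes shifted by an octave for this zone."""
    # crc32 gives a stable per-zone number; Python's built-in hash() of a str
    # changes between interpreter runs, so it is avoided here.
    zone_seed = shared_seed ^ zlib.crc32(zone_name.encode("utf-8"))
    rng = random.Random(zone_seed)
    return [
        p + rng.choice([-12, 12]) if rng.random() < change_prob else p
        for p in BASE_PHRASE
    ]


for zone in ("theater", "kitchen", "hallway"):
    print(zone, zone_variation(zone, shared_seed=2026))
```

Because only octaves change, every zone keeps the same pitch classes and the rooms stay harmonically compatible.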

Benefits

- No playlist management

- Seamless transitions

- Always-fresh background audio

In a multichannel AVR setup, the signal path is:

Generative Engine → Multichannel Spatial Renderer → Power Amplification → Speakers

The home becomes a continuously evolving acoustic environment rather than a playback room.

In-Car Audio Integration: Adaptive Driving Soundtracks

Vehicles increasingly function as mobile entertainment spaces.

Generative Music allows:

Speed-Responsive Music

Tempo increases gently with vehicle speed.
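A hedged sketch of what "gently" could mean in practice: map speed to a target tempo, then move only a small fraction of the way toward that target on each control update. The BPM range, the 130 km/h ceiling, and the smoothing factor `alpha` are assumed values, not taken from any vehicle system.

```python
def target_tempo(speed_kmh, min_bpm=70.0, max_bpm=110.0, max_speed=130.0):
    """Linear map from speed to tempo, clamped to [min_bpm, max_bpm]."""
    ratio = max(0.0, min(1.0, speed_kmh / max_speed))
    return min_bpm + ratio * (max_bpm - min_bpm)


def smooth_tempo(current_bpm, speed_kmh, alpha=0.05):
    """Exponential smoothing: close 5% of the gap to the target per update."""
    return current_bpm + alpha * (target_tempo(speed_kmh) - current_bpm)


bpm = 70.0
for _ in range(50):  # 50 control updates after the car reaches 130 km/h
    bpm = smooth_tempo(bpm, 130.0)
print(round(bpm, 1))
```

The smoothing step keeps a sudden burst of acceleration from producing an audible tempo jump.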

Driving-Mode Profiles

- Comfort → Calm textures

- Sport → Rhythmic energy

- Eco → Minimalist tones
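These profiles could be expressed as plain configuration that the engine reads when the driving mode changes. The mode names come from the list above; the `ModeProfile` fields and their values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModeProfile:
    texture: str
    rhythm_density: float  # 0.0 (no pulse) to 1.0 (busy)
    max_voices: int


# Hypothetical parameter values for each driving mode.
PROFILES = {
    "comfort": ModeProfile("calm textures", 0.2, 4),
    "sport": ModeProfile("rhythmic energy", 0.8, 6),
    "eco": ModeProfile("minimalist tones", 0.1, 2),
}


def profile_for(mode):
    """Look up a profile, falling back to comfort for unknown modes."""
    return PROFILES.get(mode.lower(), PROFILES["comfort"])


print(profile_for("Sport"))
```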

Route-Based Mood Shifts

Highway, city, tunnel, and countryside each influence the music.

Benefits

- Reduced repetition on long drives

- No dependency on playlists

- Emotionally aligned driving experience

Signal path: Generative Music Engine → Vehicle DSP → Multi-Speaker System

The car gains a continuously adaptive soundtrack.

Relationship with Immersive Audio

Generative Music pairs naturally with spatial audio:

- Objects instead of channels

- Moving sound sources

- Height and depth modulation

Music becomes not only time-evolving but space-evolving.
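As a simplified sketch of a moving sound object, the code below gives an object a position that orbits the listener over time and derives stereo gains from it with constant-power panning. A real object-based renderer would use the full 3D position and the actual speaker layout; the orbit parameters and function names here are assumptions.

```python
import math


def orbit_position(t_seconds, period_s=20.0, radius_m=2.0, height_m=1.2):
    """Position (x, y, z) of a sound object circling the listener once per period."""
    angle = 2 * math.pi * (t_seconds / period_s)
    return (radius_m * math.sin(angle), radius_m * math.cos(angle), height_m)


def stereo_gains(x, radius_m=2.0):
    """Constant-power pan from the left-right coordinate x in [-radius, radius]."""
    pan = max(-1.0, min(1.0, x / radius_m))  # -1 = hard left, +1 = hard right
    theta = (pan + 1.0) * math.pi / 4        # 0 .. pi/2
    return math.cos(theta), math.sin(theta)  # (left, right); left^2 + right^2 = 1


for t in (0.0, 2.5, 5.0):
    x, y, z = orbit_position(t)
    left, right = stereo_gains(x)
    print(t, round(left, 3), round(right, 3))
```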

Business Model Shift

Traditional:

Sell tracks, albums, licenses.

Generative:

Sell engines, systems, subscriptions, experiences.

Music becomes a service rather than a product.

Impact on Creators

Generative Music does not replace musicians.

It changes their role:

Composer → System Designer

Producer → Style Architect

Artist → Experience Curator

Human creativity moves upstream into rule creation and aesthetic direction.

Conclusion

Generative Music represents a fundamental transition:

- From static works to living systems
- From repetition to continuous evolution
- From playback to computation

The future of music is not about choosing songs.

It is about entering sound worlds.

Tom

27 Jan, 2026

This is wild. Imagine a house that generates its own ambient music based on the time of day... sounds like sci-fi but it's actually happening. Super cool read!

Robert

26 Jan, 2026

Fascinating read! Algorithmic sound is clearly moving from niche labs to everyday spaces. The shift from 'static tracks' to adaptive, context-aware audio could revolutionize how we design multi-zone systems, enabling each room to generate its own mood in real time. Would love to see how OpenAudio plans to integrate generative engines into HOLOWHAS routing groups for seamless whole-house adaptive playback.